Transformer (Part 3: Transformer Architecture)

Encoder & Decoder

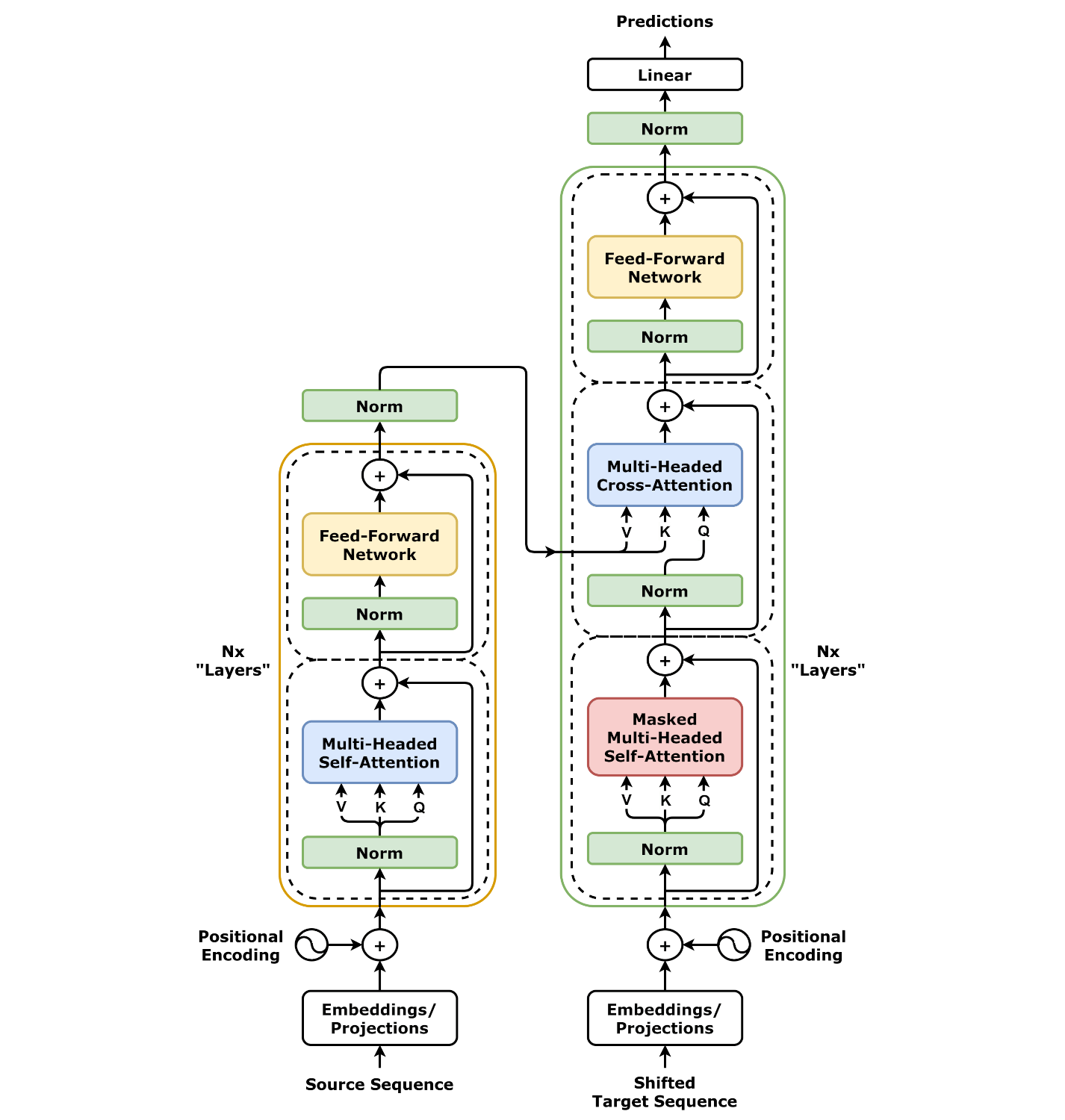

The Transformer consists of two main parts: an encoder and a decoder. They are connected through cross-attention, in which the decoder attends to the encoder's output.

- Encoder: Processes the input sequence using multiple layers of self-attention and feed-forward networks.

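- Decoder: Takes the encoder's output and generates the target sequence one token at a time, using masked self-attention over the tokens generated so far, cross-attention over the encoder's output, and feed-forward networks.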
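To make the data flow between the two parts concrete, here is a minimal NumPy sketch of one way the encoder and decoder could be wired together. It is an illustration under simplifying assumptions, not a full implementation: it uses single-head attention without learned query/key/value projections, and it leaves out embeddings, positional encodings, and layer normalization. The names `attention`, `encoder_layer`, `decoder_layer`, and `make_params`, and the sizes `d_model = 8` and `d_ff = 16`, are hypothetical choices made for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v, mask=None):
    """Scaled dot-product attention; mask is True where attending is allowed."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    if mask is not None:
        scores = np.where(mask, scores, -1e9)
    return softmax(scores) @ v

def feed_forward(x, w1, w2):
    # Position-wise two-layer network with a ReLU in between.
    return np.maximum(0.0, x @ w1) @ w2

def encoder_layer(x, p):
    x = x + attention(x, x, x)                    # self-attention + residual
    return x + feed_forward(x, p["w1"], p["w2"])  # feed-forward + residual

def decoder_layer(y, memory, p):
    # Causal mask: position i may only attend to positions <= i.
    causal = np.tril(np.ones((len(y), len(y)), dtype=bool))
    y = y + attention(y, y, y, mask=causal)       # masked self-attention
    y = y + attention(y, memory, memory)          # cross-attention to encoder output
    return y + feed_forward(y, p["w1"], p["w2"])

rng = np.random.default_rng(0)
d_model, d_ff = 8, 16  # assumed toy sizes

def make_params():
    return {"w1": rng.normal(scale=0.1, size=(d_model, d_ff)),
            "w2": rng.normal(scale=0.1, size=(d_ff, d_model))}

enc_params = [make_params() for _ in range(2)]
dec_params = [make_params() for _ in range(2)]

src = rng.normal(size=(5, d_model))  # 5 source positions (stand-in for embeddings)
tgt = rng.normal(size=(3, d_model))  # 3 target positions (stand-in for embeddings)

def encode(x):
    for p in enc_params:
        x = encoder_layer(x, p)
    return x

def decode(y, memory):
    for p in dec_params:
        y = decoder_layer(y, memory, p)
    return y

memory = encode(src)
out = decode(tgt, memory)
print(memory.shape, out.shape)  # (5, 8) (3, 8)
```

Note that the only place where the two stacks meet is the cross-attention call in `decoder_layer`: the queries come from the decoder, while the keys and values come from the encoder output (`memory`).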

The Transformer Architecture: